Executive Summary

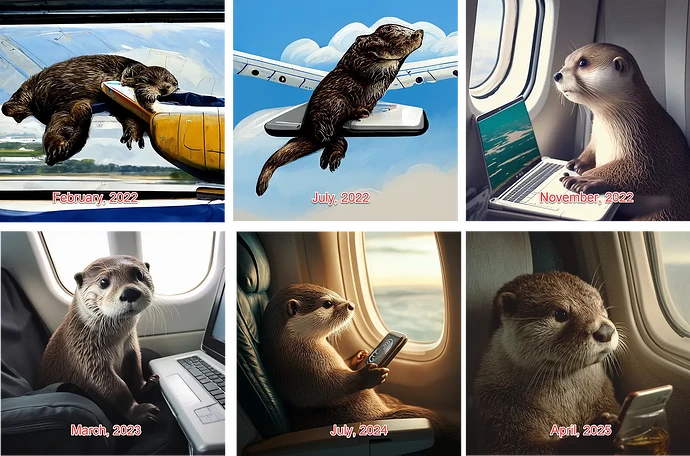

- The Three-Year Acceleration: Ethan Mollick’s otter evolution reveals AI’s progression from abstract blobs to photorealistic video with sound in under three years—capabilities that are already being weaponized

- Democratization Already Complete: Open-source models that can create deepfakes are available TODAY on home computers—not months away but right now

- Real-World Weaponization: The May 2025 India-Pakistan conflict saw deepfake videos spread to 700,000+ viewers within hours, with major media outlets broadcasting AI-generated content before fact-checkers could respond

- Beyond Detection: Universities have failed to reliably detect AI-generated content despite significant investment, with many abandoning detection efforts entirely

- Urgent Action Required: The humanitarian sector needs immediate protocols—the tools for our destruction are already freely downloadable

Introduction: When Otters Become Weapons

Last October, I wrote about Donald Trump sharing AI-generated images of Taylor Swift supporters, warning it was “a stark warning for the humanitarian sector.” By November, I was already conducting emergency training sessions with the Estonian Refugee Council on these emerging threats. Eight months later, reading Ethan Mollick’s “The recent history of AI in 32 otters”, I realize my warning was catastrophically understated.

Mollick’s whimsical benchmark—tracking AI’s ability to generate “otter on a plane using wifi”—reveals something terrifying: In less than three years, we’ve gone from abstract fur blobs to photorealistic videos with AI-generated sound. But here’s what should paralyze every humanitarian security manager: the open-source versions that anyone can run on a home computer are already here. Today. Right now.

As Mollick demonstrates, he created images using Flux and videos using HunyuanVideo on his personal computer. Yes, the quality is lower than Google’s Veo or OpenAI’s systems. But for creating deepfakes that can destroy humanitarian operations? “Good enough to fool” is all that’s needed.

The India-Pakistan Deepfake War: A Preview of Humanitarian Chaos

When Fiction Became “Fact” in Hours

The May 2025 India-Pakistan conflict provided a horrifying preview of what’s coming for humanitarian operations. A deepfake video of Pakistani General Ahmed Sharif Chaudhry falsely claiming Pakistan had lost two aircraft was shared nearly 700,000 times before being debunked. By then, several Indian media companies, including large outlets like NDTV, had already picked up the story and run with it.

The sophistication was remarkable: Chaudhry’s lips appeared to sync with the altered audio, which had been overlaid on footage from a 2024 press conference. This wasn’t crude manipulation. It was convincing enough that major news organizations broadcast it as fact.

The Speed of Deception

What makes this relevant for humanitarian operations is the velocity of spread versus verification. Artificial intelligence loomed large in the conflict: AI-generated disinformation, including deepfake videos depicting a Pakistani military officer acknowledging the loss of jets and U.S. President Donald Trump vowing to “erase Pakistan,” supplemented other false reports.

The timeline is crucial: these fakes spread to hundreds of thousands within hours, while fact-checking took days. In a humanitarian context, where operational decisions happen in real time, this gap could be lethal.

Personal Confession: Even I Was Fooled

I need to be honest here. During the height of the conflict, scrolling through my feeds, I momentarily believed one of the AI-generated satellite images showing destroyed military installations. The quality was that good. It took deliberate investigation to realize that the AI-enhanced image had been manipulated. If someone with my background in both AI and humanitarian operations can be fooled, even briefly, what chance do field teams have under pressure?

From Abstract to Arsenal: The Three-Year Timeline

Mollick’s timeline, viewed through a humanitarian security lens, is terrifying:

- 2022: “Melted fur” - AI images were obviously fake, easily dismissed

- 2023: Photorealistic still images emerge - the credibility crisis begins

- 2024: High-quality images become trivial to produce

- 2025: Photorealistic video with synchronized AI-generated audio - available on home computers

Each leap represents an exponential increase in threat potential. What took Hollywood studios millions of dollars and months of work three years ago can now be done in minutes on a laptop, or even on a five-year-old smartphone.

The Open-Source Reality Check

Mollick’s most critical observation deserves emphasis: open-source models aren’t “coming soon”—they’re here. He demonstrates this with:

- Flux: Creating high-quality images on his home computer

- HunyuanVideo: Generating videos (albeit “hideous” quality) on personal hardware

For humanitarian operations, this means:

- Zero barriers: Anyone with a basic computer or smartphone can create damaging deepfakes today

- No control possible: Open-source tools can’t be regulated or shut down

- Quality sufficient for damage: Even “lower quality” is good enough to fool stressed observers

The Escalation in Sector Awareness

The escalation in concerns is palpable. From my November training with the Estonian Refugee Council where deepfakes were a “future threat,” to last week’s Caritas Switzerland workshop where teams were actively worried, to yesterday’s OCHA Afghanistan briefing where it’s now an operational priority—the timeline matches Mollick’s otter evolution perfectly.

Real Threats in Real Operations

The Sudan Voice Cloning Scenario

During my AI implementation workshop with Caritas Switzerland’s Lebanon and Syria teams last week—and reinforced in yesterday’s briefing with OCHA Afghanistan—I described a team in Sudan expressing deep concern about voice cloning. Their fear? Someone could clone their team leader’s voice and distribute WhatsApp messages claiming they support one side of the conflict.

This isn’t hypothetical. It’s technically trivial today, requiring:

- Less than 30 seconds of source audio (from any public speech or video)

- Free, downloadable software

- About 10 minutes of processing time

- A basic laptop

Escalation to Video: The New Nightmare

The India-Pakistan conflict showed us the future. Fake Indian defence personnel profiles were also deployed, and fabricated stories spread faster than truth. Now imagine these capabilities applied to humanitarian contexts:

- Fabricated evidence of aid diversion: Video “proof” of food distributions going to armed groups

- Manufactured neutrality violations: Synthetic footage of humanitarian vehicles at military checkpoints

- Fake accountability failures: Generated videos of aid workers accepting bribes

- Compromised negotiations: Deepfake footage of humanitarian leaders making inflammatory statements

Each scenario would have been science fiction three years ago. Each is achievable on a laptop today.

The Detection Delusion

During my Caritas Switzerland workshop last week, participants asked about AI detection tools. The same question came up in yesterday’s OCHA Afghanistan briefing. My response was blunt: universities have failed to reliably detect AI-generated content despite investing significant resources. As I explained to the teams, “there’s been an arms race between universities and students where universities were trying to develop ways to detect if these essays were AI written, but they have not been able to succeed.”

Why Detection Failed Catastrophically

The evidence is overwhelming:

- AI detection tools “do not work,” and it is “an arms race between generation and detection…detection tool companies cannot hope to win”

- Major institutions including Montclair State University, Vanderbilt University, the University of Texas at Austin and Northwestern University have announced that academics should not use AI-detector features

- Research shows “humans can detect AI-written work about as accurately as predicting a coin toss — meaning poorly”

The India-Pakistan case study proves detection doesn’t work at scale:

- Visuals being shared online as evidence of fighting included those of a February plane crash in Philadelphia and images from the Israel-Gaza war

- Social media platforms could not keep up with the stream of misinformation and fake news

- Major media outlets broadcast deepfakes before detection occurred

Mollick reinforces this technical reality: when AI can reason about spatial relationships from scratch (his TikZ drawing example), it’s creating genuinely novel content. There’s no pattern to detect—each creation is unique.

Humanitarian Implications: Beyond Technical Solutions

The “Liar’s Dividend” in Crisis Zones

As I warned in October, the “liar’s dividend” (the ability to dismiss real evidence as potentially fake) becomes weaponized in humanitarian contexts. The India-Pakistan conflict illustrated the mechanism: confronted with frequent lies, people are more likely to be skeptical about accurate news.

For humanitarian operations, this means:

- Genuine evidence of atrocities can be dismissed as “deepfakes”

- Accountability mechanisms break down when nothing can be verified

- Trust evaporates when communities can’t distinguish real from synthetic

Speed Kills: The Verification Gap

The India-Pakistan timeline revealed a fatal gap: misinformation trickles down “from X to WhatsApp, which is the communication tool which is most used in South Asian communities”. In humanitarian contexts, this cascade happens even faster:

- Hour 1: Deepfake created and posted

- Hour 2-4: Spreads through social media

- Hour 4-8: Reaches WhatsApp groups and local networks

- Hour 8-24: Operational decisions made based on false information

- Day 2-3: Fact-checkers begin debunking

- Day 3+: Damage already done, trust destroyed

Practical Protocols for Resource-Constrained Teams

Recognizing the Reality: Do What You Can With What You Have

The India-Pakistan experience showed that fake Indian defence personnel profiles were deployed alongside deepfakes. For overstretched humanitarian teams already dealing with budget cuts and staff losses, here’s what’s actually feasible:

1. Basic Verification Habits (No Tech Required)

- The 10-Second Rule: If shocking news arrives via WhatsApp/social media, wait 10 seconds before reacting. Ask: “Who benefits if I believe this?”

- Source Check: For critical information, always ask for a second source through a different channel (if WhatsApp, confirm via phone; if video, confirm via trusted local contact)

- Trust Your Gut: If something feels “too perfect” or “too shocking,” it probably is. Your field experience matters

2. Simple Senior Management Briefing Points

Train leadership to recognize red flags:

- Sudden “leaked” videos showing your organization violating neutrality

- Audio messages from colleagues saying things completely out of character

- “Evidence” that arrives just when it would cause maximum damage

- Any content pushing you to make immediate, irreversible decisions

3. The Minimum Viable Response Plan

With limited resources, focus only on:

- One designated person who monitors for synthetic media threats (can be part-time)

- One pre-written statement ready to deploy: “We are aware of content circulating about our organization. We are investigating and will respond through official channels only.”

- One simple rule: All major operational decisions require confirmation through two different communication methods
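For teams that track decisions in a spreadsheet or simple internal tool, the two-channel rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class names (`Confirmation`, `DecisionRequest`), the channel labels, and the example names are hypothetical and not part of any existing humanitarian system. The point is that a second confirmation only counts if it arrives through a different communication method.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Confirmation:
    channel: str       # hypothetical label, e.g. "whatsapp", "phone", "in_person"
    confirmed_by: str  # name or role of the person who confirmed


@dataclass
class DecisionRequest:
    description: str
    confirmations: List[Confirmation] = field(default_factory=list)

    def add_confirmation(self, channel: str, confirmed_by: str) -> None:
        # Normalize the label so "WhatsApp" and "whatsapp " count as one channel.
        self.confirmations.append(
            Confirmation(channel.strip().lower(), confirmed_by)
        )

    def distinct_channels(self) -> Set[str]:
        return {c.channel for c in self.confirmations}

    def is_cleared(self, required_channels: int = 2) -> bool:
        # Two messages on the same channel do NOT satisfy the rule;
        # the confirmations must come through different methods.
        return len(self.distinct_channels()) >= required_channels


if __name__ == "__main__":
    request = DecisionRequest("Suspend food distribution at site A")
    request.add_confirmation("WhatsApp", "Team leader (voice note)")
    print(request.is_cleared())  # one channel only, so not cleared
    request.add_confirmation("phone", "Team leader (call-back)")
    print(request.is_cleared())  # two different channels, so cleared
```

The design choice worth noting is that the check counts distinct channels rather than the number of messages, so a cloned voice note followed by a spoofed text on the same app would still leave the decision uncleared.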

4. What Field Teams Actually Need to Know

Skip the complex protocols. Tell them:

- Voice messages can be faked with 30 seconds of recorded speech

- Videos can be manipulated to show you at places you’ve never been

- If accused of something via synthetic media, immediately inform HQ

- Document your actual location/activities (even simple written logs help)

5. Zero-Cost Protection Measures

- Use existing code words your team already knows

- Leverage your existing local networks - they’re your best verification system

- When in doubt, fall back on face-to-face meetings for critical decisions

- Keep doing what you’re already good at: building trust with communities who can vouch for you

For Organizations With No Budget for New Tools

Focus on the highest-risk scenarios only:

- Fake evidence of aid diversion that could shut down your programs

- Synthetic content that could endanger staff safety

- Deepfakes that could destroy community trust overnight

The 80/20 rule applies: 80% of protection comes from basic awareness and simple protocols, not expensive technology.

The Hard Truth About Detection

As the India-Pakistan conflict showed, even major media outlets couldn’t detect sophisticated fakes in time. Your field team won’t be able to either. Instead of trying to become detection experts, focus on:

- Building relationships that can survive synthetic media attacks

- Creating operational habits that don’t rely on digital evidence alone

- Preparing simple responses for when (not if) you’re targeted

Why This Can’t Wait: The Acceleration Factor

Mollick’s observation about exponential progress is crucial. The India-Pakistan conflict showed us what happens when these tools are weaponized. Secretary of State Marco Rubio spoke to officials from both India and Pakistan trying to prevent escalation, but misinformation had already poisoned the well.

For humanitarian operations, waiting means:

- Every month, tools become more sophisticated

- Every week, more bad actors gain access

- Every day, our vulnerabilities multiply

- Every hour increases the chance of a catastrophic incident

Moving Forward: From Awareness to Action

At MarketImpact, we’ve been tracking these developments closely, integrating synthetic media awareness into our digital transformation work with humanitarian organizations. While technology alone isn’t the answer, understanding the threat landscape is crucial for developing practical protocols that work within existing constraints.

A Call for Emergency Action

My October post called for dialogue. Today, with the India-Pakistan conflict as proof and Mollick’s timeline as evidence, I’m calling for emergency protocols:

This Week - Crisis Mode:

- Security Briefing: One-page warning to all senior staff about deepfake threats

- Basic Protocols: Implement the 10-second rule and two-source verification

- Statement Ready: Draft your single emergency response statement

- Document Current Activities: Start simple logs of where your teams actually are

This Month - Building Resilience:

- Staff Awareness: 15-minute briefings for all staff on basic threats

- Local Network Mapping: Identify trusted community voices who can vouch for you

- Practice Scenarios: Run one simple drill - what if a fake video surfaces tomorrow?

- Share Intelligence: Connect with other organizations in your area about threats

This Quarter - Sustainable Protection:

- Integrate Into Existing Security Protocols: Don’t create new systems, adapt what you have

- Community Engagement: Ensure local leaders know about synthetic media threats

- Document Lessons: When incidents occur, share what worked with the sector

- Advocate for Support: Push donors to recognize this as a legitimate security cost

Join the Urgent Conversation at AidGPT.org

I created AidGPT.org as an open space for critical discussions about AI’s impact on humanitarian work. What began as a platform for exploring AI’s potential has evolved to address existential threats—from the USAID funding freeze to this deepfake crisis.

This isn’t just another forum—it’s a safe space for open, honest discussion about threats that many organizations are reluctant to discuss publicly. Whether you’ve encountered suspicious content, developed workable protocols, or simply want to learn from others’ experiences, your voice is needed in this conversation.

Conclusion: The Otter Has Landed

Mollick ends with AI-generated otters performing “like the musical Cats”—absurd but completely convincing. It’s the perfect metaphor: we now live in a world where the absurd can be made believable in minutes, where evidence itself has become unreliable.

The India-Pakistan conflict wasn’t a distant warning—it was a preview of the chaos coming for humanitarian operations. When a deepfake can reach 700,000 people faster than fact-checkers can type, when major media outlets broadcast synthetic content as truth, when even experts are briefly fooled, we’ve entered a new era.

The humanitarian sector has always depended on trust—from communities, donors, and partners. Synthetic media doesn’t just threaten our operations; it threatens to shatter the very foundation of humanitarian action.

My October warning wasn’t premature; it was dangerously understated. We don’t have months to prepare. The tools are already here, freely downloadable, actively being used. The India-Pakistan conflict showed us the playbook. The only question is whether we’ll be ready when, not if, these weapons are turned on us.

But readiness doesn’t require expensive technology or complex protocols. It requires awareness, simple habits, and the relationships we’ve always relied on. In a world where seeing is no longer believing, our greatest protection remains what it’s always been: the trust we’ve built with communities and each other.

The otters have evolved. The question is: have we?

What synthetic media threats are you seeing? What simple protocols are working for your team? Have you encountered suspicious content targeting humanitarian operations? Join the urgent discussion at AidGPT.org or share below.

#HumanitarianAI #Deepfakes #IndiaPakistanConflict #AidSecurity #SyntheticMedia #HumanitarianTech #AIEthics #DigitalSecurity #AidGPT #HumanitarianInnovation #CrisisResponse #AIRisk #TrustInAid #EmergencyProtocols #HumanitarianSecurity